| Title: | General Bivariate Copula Theory and Many Utility Functions |

| Version: | 2.2.11 |

| Date: | 2025-11-02 |

| Description: | Extensive functions for bivariate copula (bicopula) computations and related operations for bicopula theory. The lower, upper, product, and select other bicopula are implemented along with operations including the diagonal, survival copula, dual of a copula, co-copula, and numerical bicopula density. Level sets, horizontal and vertical sections are supported. Numerical derivatives and inverses of a bicopula are provided through which simulation is implemented. Bicopula composition, convex combination, asymmetry extension, and products also are provided. Support extends to the Kendall Function as well as the Lmoments thereof. Kendall Tau, Spearman Rho and Footrule, Gini Gamma, Blomqvist Beta, Hoeffding Phi, Schweizer- Wolff Sigma, tail dependency, tail order, skewness, and bivariate Lmoments are implemented, and positive/negative quadrant dependency, left (right) increasing (decreasing) are available. Other features include Kullback-Leibler Divergence, Vuong Procedure, spectral measure, and Lcomoments for fit and inference, Lcomoment ratio diagrams, maximum likelihood, and AIC, BIC, and RMSE for goodness-of-fit. |

| Maintainer: | William Asquith <william.asquith@ttu.edu> |

| Repository: | CRAN |

| Depends: | R (≥ 4.0.0) |

| Imports: | lmomco, mvtnorm, randtoolbox |

| Suggests: | copula |

| License: | GPL-2 |

| NeedsCompilation: | no |

| Packaged: | 2025-11-02 21:30:13 UTC; wasquith |

| Author: | William Asquith |

| Date/Publication: | 2025-11-03 06:10:15 UTC |

Basic Theoretical Copula, Empirical Copula, and Various Utility Functions

Description

The copBasic package is oriented around bivariate copula theory and mathematical operations closely follow the recommended texts of Nelsen (2006) and Joe (2014) as well as select other references. Another recommended text is Salvadori et al. (2007) and Hofert et al. (2018) and are cited herein, but about half of that excellent book concerns univariate applications. The primal objective of copBasic is to provide a study of numerous results shown by authoritative texts on copulas. It is intended that the package will help other copula students in self study, potential course work, and applied circumstances.

Notes on copulas that are supported. The author has focused on pedagogical aspects of copulas, and this package is a diary of sorts that began in fall 2008. Originally, the author did not implement many copulas in the copBasic in order to deliberately avoid redundancy to that support such as it exists on the R CRAN. Though as time has progressed, other copulas have been added based on needs of a user community, needs to show some concepts in the general theory, or test algorithms. For example, the Clayton copula (CLcop) was a late arriving addition the package (c.2017), which was added to assist a specific user; the Frank copula (FRcop was added in December 2024 because of an inquiry on using copBasic for vine copula (see vine example under FRcop). The Normal (NORMcop), Marshall–Olkin (MOcop), and t-Student copulas (Tcop) were added in summer 2025 because of an inquiry on meanings and interpretive properties of L-comoments.

The language and vocabulary of copulas are formidable even within the realm of bivariate or bicopula as design basis for the package. Often “vocabulary” words are often emphasized in italics, which is used extensively and usually near the opening of function-by-function documentation to identify vocabulary words, such as survival copula (see surCOP). This syntax tries to mimic and accentuate the terminology in Nelsen (2006), Joe (2014), and generally most other texts. Italics are used to draw connections between concepts.

In conjunction with the summary of functions in copBasic-package, the extensive cross referencing to functions and expansive keyword indexing should be beneficial. The author had no experience with copulas prior to a chance happening upon Nelsen (2006) in c.2008. The copBasic package is a personal tour de force in self-guided learning. Over time, this package and user's manual have been helpful to many others.

Helpful Navigation of Copulas Implemented in the copBasic Package

Some entry points to the copulas implemented are listed in the Table of Copulas:

| Name | Symbol | Function | Concept |

| Lower-bounds copula | \mathbf{W}(u,v) | W | copula |

| Independence copula | \mathbf{\Pi}(u,v) | P | copula |

| Upper-bounds copula | \mathbf{M}(u,v) | M | copula |

| Fréchet Family copula | \mathbf{FF}(u,v) | FRECHETcop | copula |

| Ali–Mikhail–Haq copula | \mathbf{AMH}(u,v) | AMHcop | copula |

| Clayton copula | \mathbf{CL}(u,v) | CLcop | copula |

| Copula of uniform circle | \mathbf{CIRC}(u,v) | CIRCcop | copula |

| Farlie–Gumbel–Morgenstern (generalized) | \mathbf{FGM}(u,v) | FGMcop | copula |

| Frank | \mathbf{FR}(u,v) | FRcop | copula |

| Galambos copula | \mathbf{GL}(u,v) | GLcop | copula |

| Gumbel–Hougaard copula | \mathbf{GH}(u,v) | GHcop | copula |

| Hairpin copula | \mathbf{HAIRPIN(u,v)} | HAIRPINcop | copula |

| Hüsler–Reiss copula | \mathbf{HR}(u,v) | HRcop | copula |

| Joe B5 (the “Joe”) copula | \mathbf{B5}(u,v) | JOcopB5 | copula |

| Joe–Ma copula | \mathbf{JOMA}(u,v) | JOMAcop | copula |

| Marshall–Olkin copula | \mathbf{MO}(u,v) | MOcop | copula |

| Nelsen eq.4-2-12 copula | \mathbf{N4212cop}(u,v) | N4212cop | copula |

| Nelsen eq.4-2-20 copula | \mathbf{N4220cop}(u,v) | N4220cop | copula |

| Nelsen eq.4-2-20 copula (reflected) | \mathbf{rN4220cop}(u,v) | rN4220cop | copula |

Normal copula (refer also to Tcop) | \mathbf{NORM}(u,v) | NORMcop | copula |

| Ordinal Sums by Copula | \mathbf{C}_\mathcal{J}(u,v) | ORDSUMcop | copula |

| Ordinal Sums by W-Copula | \mathbf{C}_\mathcal{J}(u,v) | ORDSUWcop | copula |

| Pareto copula | \mathbf{PA}(u,v) | PAcop | copula |

| Plackett copula | \mathbf{PL}(u,v) | PLcop | copula |

| PSP copula | \mathbf{PSP}(u,v) | PSP | copula |

| Raftery copula | \mathbf{RF}(u,v) | RFcop | copula |

| Rayleigh copula | \mathbf{RAY}(u,v) | RAYcop | copula |

| g-EV copula (Gaussian extreme value) | \mathbf{gEV}(u,v) | gEVcop | copula |

| t-EV copula (t-distribution extreme value) | \mathbf{tEV}(u,v) | tEVcop | copula |

t-Student copula (refer also to NORMcop) | \mathbf{T}(u,v) | Tcop | copula |

A few comments on notation herein are needed. A bold math typeface is used to represent a copula function or family such as \mathbf{\Pi} (see P) for the independence copula. The syntax \mathcal{R}\times\mathcal{R} \equiv \mathcal{R}^2 denotes the orthogonal domain of two real numbers, and [0,1]\times [0,1] \equiv \mathcal{I}\times\mathcal{I} \equiv \mathcal{I}^2 denotes the orthogonal domain on the unit square of probabilities. Limits of integration [0,1] or [0,1]^2 involving copulas are thus shown as \mathcal{I} and \mathcal{I}^2, respectively.

Random variables X and Y respectively denote the horizontal and vertical directions in \mathcal{R}^2. Their probabilistic counterparts are uniformly distributed random variables on [0, 1], are respectively denoted as U and V, and necessarily also are the respective directions in \mathcal{I}^2 (U denotes the horizontal, V denotes the vertical). Often realizations of these random variables are respectively x and y for X and Y and u and v for U and V. There is an obvious difference between nonexceedance probability F and its complement, which is exceedance probability defined as 1-F. Both u and v herein are in nonexceedance probability. Arguments to many functions herein are u = u and v = v and are almost exclusively nonexceedance but there are instances for which the probability arguments are u = 1 - u = u' and v = 1 - v = v'.

Bivariate Association — Several of the functions listed above are measures of bivariate association. Two of the measures (Kendall Tau, tauCOP; Spearman Rho, rhoCOP) are widely known. R provides native support for their sample estimation of course (stats::cor()), but each function can be used to call the cor() function for parallelism to the other measures of this package. The other measures (Blomqvist Beta, Gini Gamma, Hoeffding Phi, Schweizer–Wolff Sigma, Spearman Footrule) support sample estimation by specially formed calls to their respective functions: blomCOP, giniCOP, hoefCOP, wolfCOP, and footCOP. Gini Gamma (giniCOP) documentation (also joeskewCOP) shows extensive use of theoretical and sample computations for these and other functions.

Helpful Navigation of the copBasic Package

Some other entry points into the package are listed in the following table:

| Name | Symbol | Function | Concept |

| Copula | \mathbf{C}(u,v) | COP | copula theory |

\cdots | \mathbf{C}(u,v) | COP(..., reflect=*) | reflection |

\cdots | \mathbf{C}(u,v) | COP(..., reflect=*) | rotation |

| Survival copula | \hat{\mathbf{C}}(u',v') | surCOP | copula theory |

| Joint survival function | \overline{\mathbf{C}}(u,v) | surfuncCOP | copula theory |

| Co-copula | \mathbf{C}^\star(u',v') | coCOP | copula theory |

| Dual of a copula | \tilde{\mathbf{C}}(u,v) | duCOP | copula theory |

| Primary copula diagonal | \delta(t) | diagCOP | copula theory |

| Secondary copula diagonal | \delta^\star(t) | diagCOP | copula theory |

| Inverse copula diagonal | \delta^{(-1)}(f) | diagCOPatf | copula theory |

| Joint probability | {-}{-} | jointCOP | copula theory |

| Bivariate L-moments | \delta^{[\ldots]}_{k;\mathbf{C}} | bilmoms and lcomCOP | bivariate moments |

| Bivariate L-comoments | \tau^{[\ldots]}_{k;\mathbf{C}} | bilmoms and lcomCOP | bivariate moments |

| Blomqvist Beta | \beta_\mathbf{C} | blomCOP | bivariate association |

| Gini Gamma | \gamma_\mathbf{C} | giniCOP | bivariate association |

| Hoeffding Phi | \Phi_\mathbf{C} | hoefCOP | bivariate association |

| Nu-Skew | \nu_\mathbf{C} | nuskewCOP | bivariate moments |

| Nu-Star (skew) | \nu^\star_\mathbf{C} | nustarCOP | bivariate moments |

| Lp distance to independence | \Phi_\mathbf{C} \rightarrow L_p | LpCOP | bivariate association |

| Permutation-Mu | \mu_{\infty\mathbf{C}}^\mathrm{permsym} | LzCOPpermsym | permutation asymmetry |

| Kendall Tau | \tau_\mathbf{C} | tauCOP | bivariate association |

| Kendall Measure | K_\mathbf{C}(z) | kmeasCOP | copula theory |

| Kendall Function | F_K(z) | kfuncCOP | copula theory |

Kendall Function (d-\mathbf{P}) | F_K(z; \mathbf{P}_d) | kfuncCOP_Pd | copula theory |

| Inverse Kendall Function | F_K^{(-1)}(z) | kfuncCOPinv | copula theory |

An L-moment of F_K(z) | \lambda_r(F_K) | kfuncCOPlmom | L-moment theory |

L-moments of F_K(z) | \lambda_r(F_K) | kfuncCOPlmoms | L-moment theory |

| Semi-correlations (negatives) | \rho_N^{-}(a) | semicorCOP | bivariate tail association |

| Semi-correlations (positives) | \rho_N^{+}(a) | semicorCOP | bivariate tail association |

| Spearman Footrule | \psi_\mathbf{C} | footCOP | bivariate association |

| Spearman Rho | \rho_\mathbf{C} | rhoCOP | bivariate association |

| Schweizer–Wolff Sigma | \sigma_\mathbf{C} | wolfCOP | bivariate association |

| Density of a copula | c(u,v) | densityCOP | copula density |

| Density visualization | {-}{-} | densityCOPplot | copula density |

| Empirical copula | \mathbf{C}_n(u,v) | EMPIRcop | copula |

| Empirical simulation | {-}{-} | EMPIRsim | copula simulation |

| Empirical simulation | {-}{-} | EMPIRsimv | copula simulation |

| Empirical copulatic surface | {-}{-} | EMPIRgrid | copulatic surface |

| Parametric copulatic surface | {-}{-} | gridCOP | copulatic surface |

| Parametric simulation | {-}{-} | simCOP or rCOP | copula simulation |

| Parametric simulation | {-}{-} | simCOPmicro | copula simulation |

| Maximum likelihood | \mathcal{L}(\Theta_d) | mleCOP | copula fitting |

| Akaike information criterion | \mathrm{AIC}_\mathbf{C} | aicCOP | goodness-of-fit |

| Bayesian information crit. | \mathrm{BIC}_\mathbf{C} | bicCOP | goodness-of-fit |

| Root mean square error | \mathrm{RMSE}_\mathbf{C} | rmseCOP | goodness-of-fit |

| Another goodness-of-fit | T_n | statTn | goodness-of-fit |

Goodness-of-fit — Concerning goodness-of-fit and although not quite the same as copula properties (such as “correlation”) per se as the coefficients aforementioned in the prior paragraph, three goodness-of-fit metrics of a copula compared to the empirical copula, which are all based the mean square error (MSE), are aicCOP, bicCOP, and rmseCOP. This triad of functions is useful for making decisions on whether a copula is more favorable than another to a given dataset. However, because they are genetically related by using MSE and if these are used for copula fitting by minimization, the fits will be identical. A statement of “not quite the same” is made because the previously described copula properties are generally defined as types of deviations from other copulas (such as P). Another goodness-of-fit statistic is statTn, which is based on magnitude summation of fitted copula difference from the empirical copula. These four (aicCOP, bicCOP, rmseCOP, and statTn) collectively are relative simple and readily understood measures. These bulk sample statistics are useful, but generally thought to not capture the nuances of tail behavior (semicorCOP and taildepCOP might be useful).

Bivariate Skewness and L-comoments — Bivariate skewness measures are supported in the functions joeskewCOP (nuskewCOP and nustarCOP) and uvlmoms (uvskew). Extensive discussion and example computations of bivariate skewness are provided in the joeskewCOP documentation. Lastly, so-called bivariate L-moments and bivariate L-comoments of a copula are directly computable in bilmoms (though that function using Monte Carlo integration is deprecated) and lcomCOP (direct numerical integration). Function lcomCOP is the theoretical counterpart to the sample L-comoments provided in the lmomco package by lmomco::lcomoms2() function. Parameter estimation by L-comoments is demonstrated for the MOcop under its Examples and for a potentially complex composite1COP within Examples of lcomCOP. Demonstrations of L-comoment ratio diagrams and construction methods for various copula is seen in Examples within LCOMDIA_ManyCops (a collection of vary many 1-parameter copula driven by Spearman Rho, rhoCOP), LCOMDIA_GH2cop (2-parameter Gumbel–Hougaard copula, GHcop), LCOMDIA_GH3cop (3-parameter Gumbel–Hougaard copula, GHcop), and ORDSUMcop (ordinal sums of M-copula).

Conditional Simulation — Random simulation methods by several functions are listed in the previous table (simCOPmicro, simCOP). The copBasic package explicitly uses only conditional simulation also known as the conditional distribution method for random variate generation following Nelsen (2006, pp. 40–41). The numerical derivatives (derCOP and derCOP2) and their inversions (derCOPinv and derCOPinv2) (next table) represent the foundation of the conditional simulation. There are other methods in the literature and available in other R packages, and a comparison of some methods is made in the Examples section of the Gumbel–Hougaard copula (GHcop).

Several functions in copBasic make the distinction between V with respect to (wrt) U and U wrt V, and a guide for the nomenclature involving wrt distinctions is listed in the following table:

| Name | Symbol | Function | Concept |

| Copula inversion | V wrt U | COPinv | copula operator |

| Copula inversion | U wrt V | COPinv2 | copula operator |

| Copula derivative | \delta \mathbf{C}/\delta u | derCOP | copula operator |

| Copula derivative | \delta \mathbf{C}/\delta v | derCOP2 | copula operator |

| Copula derivative inversion | V wrt U | derCOPinv | copula operator |

| Copula derivative inversion | U wrt V | derCOPinv2 | copula operator |

| Joint curves | t \mapsto \mathbf{C}(u=U, v) | joint.curvesCOP | copula theory |

| Joint curves | t \mapsto \mathbf{C}(u, v=V) | joint.curvesCOP2 | copula theory |

| Level curves | t \mapsto \mathbf{C}(u=U, v) | level.curvesCOP | copula theory |

| Level curves | t \mapsto \mathbf{C}(u, v=V) | level.curvesCOP2 | copula theory |

| Level set | V wrt U | level.setCOP | copula theory |

| Level set | U wrt V | level.setCOP2 | copula theory |

| Median regression | V wrt U | med.regressCOP | copula theory |

| Median regression | U wrt V | med.regressCOP2 | copula theory |

| Quantile regression | V wrt U | qua.regressCOP | copula theory |

| Quantile regression | U wrt V | qua.regressCOP2 | copula theory |

| Copula section | t \mapsto \mathbf{C}(t,a) | sectionCOP | copula theory |

| Copula section | t \mapsto \mathbf{C}(a,t) | sectionCOP | copula theory |

The previous two tables do not list all of the myriad of special functions to support similar operations on empirical copulas. All empirical copula operators and utilities are prepended with EMPIR in the function name. An additional note concerning package nomenclature is that an appended “2” to a function name indicates U wrt V (e.g. EMPIRgridderinv2 for an inversion of the partial derivatives \delta \mathbf{C}/\delta v across the grid of the empirical copula).

Some additional functions to compute often salient features or characteristics of copulas or bivariate data, including functions for bivariate inference or goodness-of-fit, are listed in the following table:

| Name | Symbol | Function | Concept |

| Left-tail decreasing | V wrt U | isCOP.LTD | bivariate association |

| Left-tail decreasing | U wrt V | isCOP.LTD | bivariate association |

| Right-tail increasing | V wrt U | isCOP.RTI | bivariate association |

| Right-tail increasing | U wrt V | isCOP.RTI | bivariate association |

| Pseudo-polar representation | (\widehat{S},\widehat{W}) | psepolar | extremal dependency |

| Tail concentration function | q_\mathbf{C}(t) | tailconCOP | bivariate tail association |

| Tail (lower) dependency | \lambda^L_\mathbf{C} | taildepCOP | bivariate tail association |

| Tail (upper) dependency | \lambda^U_\mathbf{C} | taildepCOP | bivariate tail association |

| Tail (lower) order | \kappa^L_\mathbf{C} | tailordCOP | bivariate tail association |

| Tail (upper) order | \kappa^U_\mathbf{C} | tailordCOP | bivariate tail association |

| Neg'ly quadrant dependency | NQD | isCOP.PQD | bivariate association |

| Pos'ly quadrant dependency | PQD | isCOP.PQD | bivariate association |

| Permutation symmetry | \mathrm{permsym} | isCOP.permsym | copula symmetry |

| Radial symmetry | \mathrm{radsym} | isCOP.radsym | copula symmetry |

| Skewness (Joe, 2014) | \eta(p; \psi) | uvskew | bivariate skewness |

| Kullback–Leibler Divergence | \mathrm{KL}(f \mid g) | kullCOP | bivariate inference |

| KL sample size | n_{f\!g} | kullCOP | bivariate inference |

| The Vuong Procedure | {-}{-} | vuongCOP | bivariate inference |

| Spectral measure | H(w) | spectralmeas | extremal dependency inference |

| Stable tail dependence | \widehat{l}(x,y) | stabtaildepf | extremal dependency inference |

| L-comoments (samp. distr.) | {-}{-} | lcomCOPpv | experimental bivariate inference |

The Table of Probabilities that follows lists important relations between various joint probability concepts, the copula, nonexceedance probabilities u and v, and exceedance probabilities u' and v'. A compact summary of these probability relations has obvious usefulness. The notation [\ldots, \ldots] is to read as [\ldots \mathrm{\ and\ } \ldots], and the [\ldots \mid \ldots] is to be read as [\ldots \mathrm{\ given\ } \ldots].

| Probability | and | Symbol Convention |

\mathrm{Pr}[\,U \le u, V \le v\,] | = | \mathbf{C}(u,v) — The copula, COP |

\mathrm{Pr}[\,U > u, V > v\,] | = | \hat{\mathbf{C}}(u',v') — The survival copula, surCOP |

\mathrm{Pr}[\,U \le u, V > v\,] | = | u - \mathbf{C}(u,v') |

\mathrm{Pr}[\,U > u, V \le v\,] | = | v - \mathbf{C}(u',v) |

\mathrm{Pr}[\,U \le u \mid V \le v\,] | = | \mathbf{C}(u,v)/v |

\mathrm{Pr}[\,V \le v \mid U \le u\,] | = | \mathbf{C}(u,v)/u |

\mathrm{Pr}[\,U \le u \mid V > v\,] | = | \bigl(u - \mathbf{C}(u,v)\bigr)/(1 - v) |

\mathrm{Pr}[\,V \le v \mid U > u\,] | = | \bigl(v - \mathbf{C}(u,v)\bigr)/(1 - u) |

\mathrm{Pr}[\,U > u \mid V > v\,] | = | \hat{\mathbf{C}}(u',v')/u' = \overline{\mathbf{C}}(u,v)/(1-u) |

\mathrm{Pr}[\,V > v \mid U > u\,] | = | \hat{\mathbf{C}}(u',v')/v' = \overline{\mathbf{C}}(u,v)/(1-v) |

\mathrm{Pr}[\,V \le v \mid U = u\,] | = | \delta \mathbf{C}(u,v)/\delta u — Partial derivative, derCOP |

\mathrm{Pr}[\,U \le u \mid V = v\,] | = | \delta \mathbf{C}(u,v)/\delta v — Partial derivative, derCOP2 |

\mathrm{Pr}[\,U > u \mathrm{\ or\ } V > v\,] | = | \mathbf{C}^\star(u',v') = 1 - \mathbf{C}(u',v') — The co-copula, coCOP |

\mathrm{Pr}[\,U \le u \mathrm{\ or\ } V \le v\,] | = | \tilde{\mathbf{C}}(u,v) = u + v - \mathbf{C}(u,v) — The dual of a copula, duCOP |

E[\,U \mid V = v\,] | = | \int_0^1 (1 - \delta \mathbf{C}(u,v)/\delta v)\,\mathrm{d}u — Expectation of U given V, EuvCOP |

E[\,V \mid U = u\,] | = | \int_0^1 (1 - \delta \mathbf{C}(u,v)/\delta u)\,\mathrm{d}v — Expectation of V given U, EvuCOP |

The function jointCOP has considerable demonstration in its Note section of the joint and and joint or relations shown through simulation and counting scenarios. Also there is a demonstration in the Note section of function duCOP on application of the concepts of joint and conditions, joint or conditions, and importantly joint mutually exclusive or conditions.

Copula Construction Methods

Permutation asymmetry (nonexchangability) can be added to (inserted in) a copula by breveCOP. One, two, or more copulas can be “composited,” “combined,” or “multiplied” in interesting ways to create highly unique bivariate relations and as a result, complex dependence structures can be formed. Such operations can often insert permutation asymmetry as well. To such an end, the package provides three main functions for copula composition: composite1COP composites a single copula with two compositing parameters (Khoudraji device with independence), composite2COP (Khoudraji device) composites two copulas with two compositing parameters, and composite3COP composites two copulas with four compositing parameters.

Two copulas also can be combined through a weighted convex combination using convex2COP with a single weighting parameter, and even N number of copulas can be combined by weights using convexCOP. So-called “gluing” two copula by a parameter is provided by glueCOP. Multiplication of two copulas to form a third is supported by prod2COP. All eight functions for compositing, combining, or multiplying copulas are compatible with joint probability simulation (simCOP), measures of association (e.g. \rho_\mathbf{C}), and presumably all other copula operations using copBasic features. Finally, ordinal sums of copula are provided by ORDSUMcop and ORDSUWcop as particularly interesting methods of combining copulas.

| No. of copulas | Combining Parameters | Function | Concept |

| 1 | \beta | breveCOP | adding permuation asymmetry |

| 1 | \alpha, \beta | composite1COP | copula combination |

| 1 | \alpha, \beta | khoudraji1COP | copula combination |

| 1 | \alpha, \beta | khoudrajiPCOP | copula combination |

| 2 | \alpha, \beta | composite2COP | copula combination |

| 2 | \alpha, \beta | khoudraji2COP | copula combination |

| 2 | \alpha, \beta, \kappa, \gamma | composite3COP | copula combination |

| 2 | \alpha, (1-\alpha) | convex2COP | weighted copula combination |

N | \omega_{i \in N} | convexCOP | weighted copula combination |

| 2 | \gamma | glueCOP | gluing of coupla |

| 2 | \bigl(\mathbf{C}_1 \ast \mathbf{C}_2 \bigr) | prod2COP | copula multiplication |

N | \mathbf{C}_{\mathcal{J}i} for \mathcal{J}_{i \in N} partitions | ORDSUMcop | M-ordinal sums of copulas |

N | \mathbf{C}_{\mathcal{J}i} for \mathcal{J}_{i \in N} partitions | ORDSUWcop | W-ordinal sums of copulas |

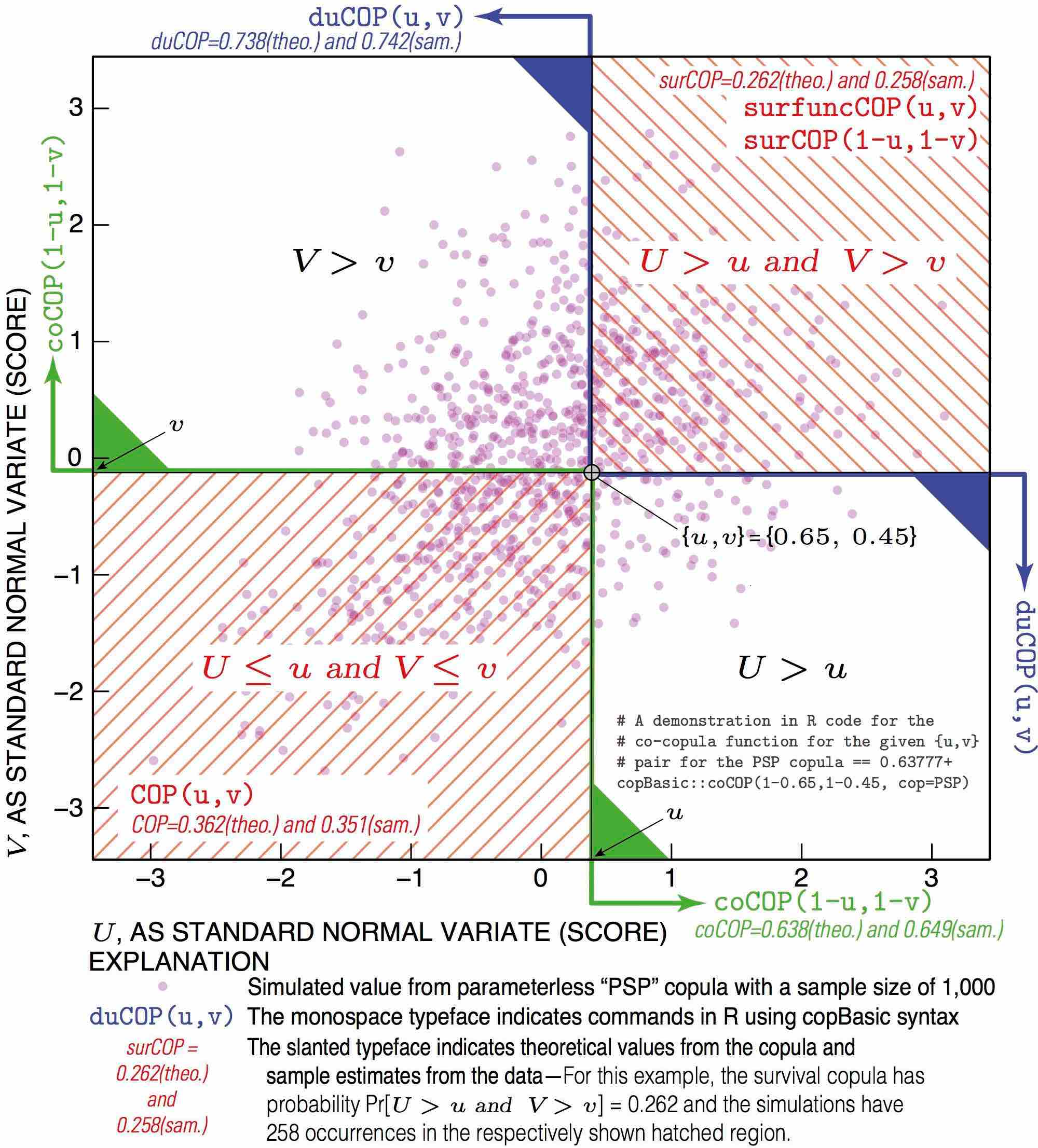

Useful Copula Relations by Visualization

There are a myriad of relations amongst variables computable through copulas, and these were listed in the Table of Probabilities earlier in this documentation. There is a script located in the inst/doc directory of the copBasic sources titled CopulaRelations_BaseFigure_inR.txt. This script demonstrates, using the PSP copula, relations between the copula (COP), survival copula (surCOP), joint survival function of a copula (surfuncCOP), co-copula (coCOP), and dual of a copula function (duCOP). The script performs simulation and manual counts observations meeting various criteria in order to compute their empirical probabilities. The script produces a base figure, which after extending in editing software, is suitable for educational description and is provided at the end of this documentation.

A Review of “Return Periods” using Copulas

Study of natural hazards commonly use as annual return periods T in years, which are defined for a nonexceedance probability q as T = 1/(1-q). In bivariate analysis, there immediately emerge two types of return periods representing T_{q;\,\mathrm{coop}} and T_{q;\,\mathrm{dual}} conditions between nonexceedances of the two hazard sources (random variables) U and V. It is usual in many applications for T to be expressed equivalently as a probability q in common for both variables.

Incidentally, the \mathrm{Pr}[\,U > u \mid V > v\,] and \mathrm{Pr}[\,V > v \mid U > u\,] probabilities also are useful for conditional return period computations following Salvadori et al. (2007, pp. 159–160) but are not further considered here. Also the F_K(w) (Kendall Function or Kendall Measure of a copula) is the core tool for secondary return period computations (see kfuncCOP).

Let the copula \mathbf{C}(u,v; \Theta) for nonexceedances u and v be set for some copula family (formula) by a parameter vector \Theta. The copula family and parameters define the joint coupling (loosely meant the dependency/correlation) between hazards U and V. If “failure” occurs if either or both hazards U and V are at probability q threshold (u = v = 1 - 1/T = q) for T-year return period, then the real return period of failure is defined using either the copula \mathbf{C}(q,q; \Theta) or the co-copula \mathbf{C}^\star(q',q'; \Theta) for exceedance probability q' = 1 - q is

T_{q;\,\mathrm{coop}} = \frac{1}{1 - \mathbf{C}(q, q; \Theta)} = \frac{1}{\mathbf{C}^\star(1-q, 1-q; \Theta)}\mbox{\ and}

T_{q;\,\mathrm{coop}} \equiv \frac{1}{\mathrm{cooperative\ risk}}\mbox{.}

Or in words, the hazard sources collaborate or cooperate to cause failure. If failure occurs, however, if and only if both hazards U and V occur simultaneously (the hazards must “dually work together” or be “conjunctive”), then the real return period is defined using either the dual of a copula (function) \tilde{\mathbf{C}}(q,q; \Theta), the joint survival function \overline{\mathbf{C}}(q,q;\Theta), or survival copula \hat{\mathbf{C}}(q',q'; \Theta) as

T_{q;\,\mathrm{dual}} = \frac{1}{1 - \tilde{\mathbf{C}}(q,q; \Theta)} = \frac{1}{\overline{\mathbf{C}}(q,q;\Theta)} = \frac{1}{\hat{\mathbf{C}}(q',q';\Theta)} \mbox{\ and}

T_{q;\,\mathrm{dual}} \equiv \frac{1}{\mathrm{complement\ of\ dual\ protection}}\mbox{.}

Numerical demonstration is informative. Salvadori et al. (2007, p. 151) show for a Gumbel–Hougaard copula (GHcop) having \Theta = 3.055 and T = 1,000 years (q = 0.999) that T_{q;\,\mathrm{coop}} = 797.1 years and that T_{q;\,\mathrm{dual}} = 1,341.4 years, which means that average return periods between “failures” are

T_{q;\,\mathrm{coop}} \le T \le T_{q;\,\mathrm{dual}}\mbox{\ and thus}

797.1 \le T \le 1314.4\mbox{\ years.}

With the following code, these bounding return-period values are readily computed and verified using the prob2T() function from the lmomco package along with copBasic functions COP (generic functional interface to a copula) and duCOP (dual of a copula):

q <- lmomco::T2prob(1000) lmomco::prob2T( COP(q,q, cop=GHcop, para=3.055)) # 797.110 lmomco::prob2T(duCOP(q,q, cop=GHcop, para=3.055)) # 1341.438

An early source (in 2005) by some of those authors cited on p. 151 of Salvadori et al. (2007; their citation “[67]”) shows T_{q;\,\mathrm{dual}} = 798 years—a rounding error seems to have been committed. Finally and just for reference, a Gumbel–Hougaard copula having \Theta = 3.055 corresponds to an analytical Kendall Tau (see GHcop) of \tau \approx 0.673, which can be verified through numerical integration available from tauCOP as:

tauCOP(cop=GHcop, para=3.055, brute=TRUE) # 0.6726542

Thus, a “better understanding of the statistical characteristics of [multiple hazard sources] requires the study of their joint distribution” (Salvadori et al., 2007, p. 150).

Interaction of copBasic to the Copulas and Features of Other Packages

The copBasic package (c. 2008–11) was not originally intended to be a port of the numerous bivariate copulas or over re-implementation other bivariate copulas available in R, but in support of didactic and teaching efforts. Though as the package passed its 10th year in 2018, the original intent changed to a more generalized purpose as more copula were added and other features by request. It is useful to point out a demonstration showing an implementation of the Gaussian copula from the copula package, which is shown in the Note section of med.regressCOP in a circumstance of ordinary least squares linear regression compared to median regression of a copula as well as prediction limits of both regressions. Another demonstration in context of maximum pseudo-log-likelihood estimation of copula parameters is seen in the Note section mleCOP, and also see “API to the copula package” or “package copula (comparison to)” entries in the Index of this user manual.

Author(s)

William Asquith william.asquith@ttu.edu

References

Cherubini, U., Luciano, E., and Vecchiato, W., 2004, Copula methods in finance: Hoboken, NJ, Wiley, 293 p.

Hernández-Maldonado, V., Díaz-Viera, M., and Erdely, A., 2012, A joint stochastic simulation method using the Bernstein copula as a flexible tool for modeling nonlinear dependence structures between petrophysical properties: Journal of Petroleum Science and Engineering, v. 90–91, pp. 112–123.

Joe, H., 2014, Dependence modeling with copulas: Boca Raton, CRC Press, 462 p.

Hofert, M., Kojadinovic, I., Mächler, M., and Yan, J., 2018, Elements of copula modeling with R: Dordrecht, Netherlands, Springer.

Nelsen, R.B., 2006, An introduction to copulas: New York, Springer, 269 p.

Salvadori, G., De Michele, C., Kottegoda, N.T., and Rosso, R., 2007, Extremes in nature—An approach using copulas: Dordrecht, Netherlands, Springer, Water Science and Technology Library 56, 292 p.

Examples

## Not run:

# Nelsen (2006, p. 75, exer. 3.15b) provides for a nice test of copBasic features.

"mcdurv" <- function(u,v, theta) {

ifelse(u > theta & u < 1-theta & v > theta & v < 1 - theta,

return(M(u,v) - theta), # Upper bounds copula with a shift

return(W(u,v))) # Lower bounds copula

}

"MCDURV" <- function(u,v, para=NULL) {

if(is.null(para)) stop("need theta")

if(para < 0 | para > 0.5) stop("theta ! in [0, 1/2]")

return(asCOP(u, v, f=mcdurv, para))

}

"afunc" <- function(t) { # a sample size = 1,000 hard wired

return(cov(simCOP(n=1000, cop=MCDURV, para=t, ploton=FALSE, points=FALSE))[1,2])

}

set.seed(6234) # setup covariance based on parameter "t" and the "root" parameter

print(uniroot(afunc, c(0, 0.5))) # "t" by simulation = 0.1023742

# Nelsen reports that if theta approx. 0.103 then covariance of U and V is zero.

# So, one will have mutually completely dependent uncorrelated uniform variables!

# Let us check some familiar measures of association:

rhoCOP( cop=MCDURV, para=0.1023742) # Spearman Rho = 0.005854481 (near zero)

tauCOP( cop=MCDURV, para=0.1023742) # Kendall Tau = 0.2648521

wolfCOP(cop=MCDURV, para=0.1023742) # S & W Sigma = 0.4690174 (less familiar)

D <- simCOP(n=1000, cop=MCDURV, para=0.1023742) # Plot mimics Nelsen (2006, fig. 3.11)

# L-comoments (matrices) measure high dimensional variable comovements

# L-comoments (refer also to the lmomco package)

lcomCOP(cop=MCDURV, para=0.1023742)

lmomco::lcomoms2(simCOP(n=1000, cop=MCDURV, para=0.1023742), nmom=5)

lmomco::lcomoms2(simCOP(n=1000, cop=MCDURV, para=0), nmom=5) # Perfect neg. corr.

lmomco::lcomoms2(simCOP(n=1000, cop=MCDURV, para=0.5), nmom=5) # Perfect pos. corr.

# T2 (L-correlation), T3 (L-coskew), T4 (L-cokurtosis), and T5 matrices result. For

# Theta = 0 or 0.5 see the matrix symmetry with a sign change for L-coskew and T5 on

# the off diagonals (offdiags). See unities for T2. See near zero for offdiag terms

# in T2 near zero. But then see that T4 off diagonals are quite different from those

# for Theta 0.1024 relative to 0 or 0.5. As a result, T4 has captured a unique

# property of U vs V.

## End(Not run)

The Ali–Mikhail–Haq Copula

Description

The Ali–Mikhail–Haq copula (Nelsen, 2006, pp. 92–93, 172) is

\mathbf{C}_{\Theta}(u,v) = \mathbf{AMH}(u,v) = \frac{uv}{1 - \Theta(1-u)(1-v)}\mbox{,}

where \Theta \in [-1,+1), where the right boundary,

\Theta = 1, can sometimes be considered valid according to Mächler (2014). The copula \Theta \rightarrow 0 becomes the independence copula (\mathbf{\Pi}(u,v); P), and the parameter \Theta is readily computed from a Kendall Tau (tauCOP) by

\tau_\mathbf{C} = \frac{3\Theta - 2}{3\Theta} -

\frac{2(1-\Theta)^2\log(1-\Theta)}{3\Theta^2}\mbox{,}

and by Spearman Rho (rhoCOP), through Mächler (2014), by

\rho_\mathbf{C} = \sum_{k=1}^\infty \frac{3\Theta^k}{{k + 2 \choose 2}^2}\mbox{.}

The support of \tau_\mathbf{C} is [(5 - 8\log(2))/3, 1/3] \approx [-0.1817258, 0.3333333] and the \rho_\mathbf{C} is [33 - 48\log(2), 4\pi^2 - 39] \approx [-0.2710647, 0.4784176], which shows that this copula has a limited range of dependency. The infinite summation is easier to work with than

Nelsen (2006, p. 172) definition of

\rho_\mathbf{C} = \frac{12(1+\Theta)}{\Theta^2}\mathrm{dilog}(1-\Theta)-

\frac{24(1-\Theta)}{\Theta^2}\log(1-\Theta)-

\frac{3(\Theta+12)}{\Theta}\mbox{,}

where the \mathrm{dilog(x)} is the dilogarithm function defined by

\mathrm{dilog}(x) = \int_1^x \frac{\log(t)}{1-t}\,\mathrm{d}t\mbox{.}

The integral version has more nuances with approaches toward \Theta = 0 and \Theta = 1 than the infinite sum version.

Usage

AMHcop(u, v, para=NULL, rho=NULL, tau=NULL, fit=c("rho", "tau"), ...)

Arguments

u |

Nonexceedance probability |

v |

Nonexceedance probability |

para |

A vector (single element) of parameters—the |

rho |

Optional Spearman Rho from which the parameter will be estimated and presence of |

tau |

Optional Kendall Tau from which the parameter will be estimated; |

fit |

If |

... |

Additional arguments to pass. |

Value

Value(s) for the copula are returned. Otherwise if tau is given, then the \Theta is computed and a list having

para |

The parameter |

tau |

Kendall Tau. |

and if para=NULL and tau=NULL, then the values within u and v are used to compute Kendall Tau and then compute the parameter, and these are returned in the aforementioned list.

Note

Mächler (2014) reports on accurate computation of \tau_\mathbf{C} and \rho_\mathbf{C} for this copula for conditions of \Theta \rightarrow 0 and in particular derives the following equation, which does not have \Theta in the denominator:

\rho_\mathbf{C} = \sum_{k=1}^{\infty} \frac{3\Theta^k}{{k+2 \choose 2}^2}\mbox{.}

The copula package provides a Taylor series expansion for \tau_\mathbf{C} for small \Theta in the copula::tauAMH(). This is demonstrated here between the implementation of \tau = 0 for parameter estimation in the copBasic package to that in the more sophisticated implementation in the copula package.

copula::tauAMH(AMHcop(tau=0)$para) # theta = -2.313076e-07

It is seen that the numerical approaches yield quite similar results for small \tau_\mathbf{C}, and finally, a comparison to the \rho_\mathbf{C} is informative:

rhoCOP(AMHcop, para=1E-9) # 3.333333e-10 (two nested integrations) copula:::.rhoAmhCopula(1E-9) # 3.333333e-10 (cutoff based) theta <- seq(-1,1, by=.0001) RHOa <- sapply(theta, function(t) rhoCOP(AMHcop, para=t)) RHOb <- sapply(theta, function(t) copula:::.rhoAmhCopula(t)) plot(10^theta, RHOa-RHOb, type="l", col=2)

The plot shows that the apparent differences are less than 1 part in 100 million—The copBasic computation is radically slower though, but rhoCOP was designed for generality of copula family.

Author(s)

W.H. Asquith

References

Joe, H., 2014, Dependence modeling with copulas: Boca Raton, CRC Press, 462 p.

Mächler, Martin, 2014, Spearman's Rho for the AMH copula—A beautiful formula: copula package vignette, accessed on April 7, 2018, at https://CRAN.R-project.org/package=copula under the vignette rhoAMH-dilog.pdf.

Nelsen, R.B., 2006, An introduction to copulas: New York, Springer, 269 p.

Pranesh, Kumar, 2010, Probability distributions and estimation of Ali–Mikhail–Haq copula: Applied Mathematical Sciences, v. 4, no. 14, p. 657–666.

See Also

Examples

## Not run:

t <- 0.9 # The Theta of the copula and we will compute Spearman Rho.

di <- integrate(function(t) log(t)/(1-t), lower=1, upper=(1-t))$value

A <- di*(1+t) - 2*log(1-t) + 2*t*log(1-t) - 3*t # Nelsen (2007, p. 172)

rho <- 12*A/t^2 - 3 # 0.4070369

rhoCOP(AMHcop, para=t) # 0.4070369

sum(sapply(1:100, function(k) 3*t^k/choose(k+2, 2)^2)) # Machler (2014)

# 0.4070369 (see Note section, very many tens of terms are needed)

## End(Not run)

## Not run:

layout(matrix(1:2, byrow=TRUE)) # Note: Kendall Tau is same on reversal.

s <- 2; set.seed(s); nsim <- 10000

UVn <- simCOP(nsim, cop=AMHcop, para=c(-0.9, "FALSE" ), col=4)

mtext("Normal definition [default]") # '2nd' parameter could be skipped

set.seed(s) # seed used to keep Rho/Tau inside attainable limits

UVr <- simCOP(nsim, cop=AMHcop, para=c(-0.9, "TRUE"), col=2)

mtext("Reversed definition")

AMHcop(UVn[,1], UVn[,2], fit="rho")$rho # -0.2581653

AMHcop(UVr[,1], UVr[,2], fit="rho")$rho # -0.2570689

rhoCOP(cop=AMHcop, para=-0.9) # -0.2483124

AMHcop(UVn[,1], UVn[,2], fit="tau")$tau # -0.1731904

AMHcop(UVr[,1], UVr[,2], fit="tau")$tau # -0.1724820

tauCOP(cop=AMHcop, para=-0.9) # -0.1663313

## End(Not run)

Copula of Circular Uniform Distribution

Description

The Circular copula of the coordinates (x, y) of a point chosen at random on the unit circle (Nelsen, 2006, p. 56) is given by

\mathbf{C}_{\mathrm{CIRC}}(u,v) = \mathbf{M}(u,v) \mathrm{\ for\ }|u-v| > 1/2\mathrm{,}

\mathbf{C}_{\mathrm{CIRC}}(u,v) = \mathbf{W}(u,v) \mathrm{\ for\ }|u+v-1| > 1/2\mathrm{,\ and}

\mathbf{C}_{\mathrm{CIRC}}(u,v) = \frac{u+v}{2} - \frac{1}{4} \mathrm{\ otherwise\ }\mathrm{.}

The coordinates of the unit circle are given by

\mathbf{CIRC}(x,y) = \biggl(\frac{\mathrm{cos}\bigl(\pi(u-1)\bigr)+1}{2}, \frac{\mathrm{cos}\bigl(\pi(v-1)\bigr)+1}{2}\biggr)\mathrm{.}

Usage

CIRCcop(u, v, para=NULL, as.circ=FALSE, ...)

Arguments

u |

Nonexceedance probability |

v |

Nonexceedance probability |

para |

Optional parameter list argument that can contain the logical |

as.circ |

A logical, if true, to trigger the transformation |

... |

Additional arguments to pass, if ever needed. |

Value

Value(s) for the copula are returned.

Author(s)

W.H. Asquith

References

Nelsen, R.B., 2006, An introduction to copulas: New York, Springer, 269 p.

Examples

CIRCcop(0.5, 0.5) # 0.25 quarterway along the diagonal upward to right

CIRCcop(0.5, 1 ) # 0.50 halfway across in horizontal direction

CIRCcop(1 , 0.5) # 0.50 halfway across in vertical direction

## Not run:

nsim <- 2000

opts <- par(xpd=NA, las=1, lend=2, no.readonly=TRUE)

rtheta <- runif(nsim, min=0, max=2*pi) # polar coordinate simulation

XY <- data.frame(X=cos(rtheta)/2 + 1/2, Y=sin(rtheta)/2 + 1/2)

plot(XY, lwd=0.8, col="lightgreen", xaxs="i", yaxs="i",

xlab="X OF UNIT CIRCLE OR NONEXCEEDANCE PROBABILITY U",

ylab="Y OF UNIT CIRCLE OR NONEXCEEDANCE PROBABILITY V")

UV <- simCOP(nsim, cop=CIRCcop, lwd=0.8, col="salmon3", ploton=FALSE)

theta <- 3/4*pi+0.1 # select a point on the upper left of the circle

x <- cos(theta)/2 + 1/2; y <- sin(theta)/2 + 1/2 # coordinates

H <- CIRCcop(x, y, as.circ=TRUE) # 0.218169 # Pr[X <= x & Y <= y]

points(x, y, pch=16, col="forestgreen", cex=2)

segments(0, y, x, y, lty=2, lwd=2, col="forestgreen")

segments(x, 0, x, y, lty=2, lwd=2, col="forestgreen")

Hemp1 <- sum(XY$X <= x & XY$Y <= y) / nrow(XY) # about 0.22 as expected

u <- 1-acos(2*x-1)/pi; v <- 1-acos(2*y-1)/pi

segments(0, v, u, v, lty=2, lwd=2, col="salmon3")

segments(u, 0, u, v, lty=2, lwd=2, col="salmon3")

points(u, v, pch=16, cex=2, col="salmon3")

arrows(x, y, u, v, code=2, lwd=2, angle=15) # arrow points from (X,Y) coordinate

# specified by angle theta in radians on the unit circle to the corresponding

# coordinate in (U,V) domain of uniform circular distribution copula

Hemp2 <- sum(UV$U <= u & UV$V <= v) / nrow(UV) # about 0.22 as expected

# Hemp1 and Hemp2 are about equal to each other and converge as nsim

# gets very large, but the origin of the simulations to get to each

# are different: (1) one in polar coordinates and (2) by copula.

# Now, draw the level curve for the empirical Hs and as nsim gets large the two

# lines will increasingly plot on top of each other.

lshemp1 <- level.setCOP(cop=CIRCcop, getlevel=Hemp1, lines=TRUE, col="blue", lwd=2)

lshemp2 <- level.setCOP(cop=CIRCcop, getlevel=Hemp2, lines=TRUE, col="blue", lwd=2)

txt <- paste0("level curves for Pr[X <= x & Y <= y] and\n",

"level curves for Pr[U <= u & V <= v],\n",

"which equal each other as nsim gets large")

text(0.52, 0.52, txt, srt=-46, col="blue"); par(opts) #

## End(Not run)

## Not run:

# Nelsen (2007, ex. 3.2, p. 57) # Singular bivariate distribution with

# standard normal margins that is not bivariate normal.

U <- runif(500); V <- simCOPmicro(U, cop=CIRCcop)

X <- qnorm(U, mean=0, sd=1); Y <- qnorm(V, mean=0, sd=1)

plot(X,Y, main="Nelsen (2007, ex. 3.2, p. 57)", xlim=c(-4,4), ylim=c(-4,4),

lwd=0.8, col="turquoise3")

rug(X, side=1, col="salmon3", tcl=0.5)

rug(Y, side=2, col="salmon3", tcl=0.5) #

## End(Not run)

## Not run:

DX <- c(5, 5, -5, -5); DY <- c(5, 5, -5, -5); D <- 6; R <- D/2

xylim <- c(-10, 10)

plot(DX, DY, type="n", xlim=xylim, ylim=xylim, xlab="X", ylab="Y", las=1,

xaxs="i", yaxs="i")

for(i in seq_len(length(DX))) lines(rep(DX[i], 2), xylim, lwd=2, col="seagreen")

for(i in seq_len(length(DY))) lines(xylim, rep(DY[i], 2), lwd=2, col="seagreen")

for(i in seq_len(length(DX))) {

for(j in seq_len(length(DY))) {

UV <- simCOP(n=30, cop=CIRCcop, pch=16, col="darkgreen", cex=0.5, graphics=FALSE)

points(UV[,1]-0.5, UV[,2]-0.5, pch=16, col="darkgreen", cex=0.5)

XY <- data.frame(X=DX[i]+sign(DX[i])*D*(cos(pi*(UV$U-1))+1)/2-sign(DX[i])*R,

Y=DY[j]+sign(DY[j])*D*(cos(pi*(UV$V-1))+1)/2-sign(DY[j])*R)

points(XY, lwd=0.8, col="darkgreen")

}

lines(rep(DX[i]+R, 2), xylim, lty=2, col="seagreen")

lines(rep(DX[i]-R, 2), xylim, lty=2, col="seagreen")

lines(xylim, rep(DY[i]+R, 2), lty=2, col="seagreen")

lines(xylim, rep(DY[i]-R, 2), lty=2, col="seagreen")

} #

## End(Not run)

## Not run:

para <- list(cop1=CIRCcop, para1=NULL, cop2=W, para2=NULL, alpha=0.8, beta=0.8)

UV <- simCOP(n=2000, col="salmon3", cop=composite2COP, para=para)

XY <- data.frame(X=(cos(pi*(UV$U-1))+1)/2, Y=(cos(pi*(UV$V-1))+1)/2)

plot(XY, type="n", xlab=paste0("X OF CIRCULAR UNIFORM DISTRIBUTION OR\n",

"NONEXCEEDANCE PROBABILITY OF U"),

ylab=paste0("Y OF CIRCULAR UNIFORM DISTRIBUTION OR\n",

"NONEXCEEDANCE PROBABILITY OF V"))

JK <- data.frame(U=1 - acos(2*XY$X - 1)/pi, V=1 - acos(2*XY$Y - 1)/pi)

segments(x0=UV$U, y0=UV$V, x1=XY$X, y1=XY$Y, col="lightgreen", lwd=0.8)

points(XY, lwd=0.8, col="darkgreen")

points(JK, pch=16, col="salmon3", cex=0.5)

t <- seq(0.001, 0.999, by=0.001)

t <- diagCOPatf(t, cop=composite2COP, para=para)

AB <- data.frame(X=(cos(pi*(t-1))+1)/2, Y=(cos(pi*(t-1))+1)/2)

lines(AB, lwd=4, col="seagreen") #

## End(Not run)

The Clayton Copula

Description

The Clayton copula (Joe, 2014, p. 168) is

\mathbf{C}_{\Theta}(u,v) = \mathbf{CL}(u,v) = \mathrm{max}\bigl[(u^{-\Theta}+v^{-\Theta}-1; 0)\bigr]^{-1/\Theta}\mbox{,}

where \Theta \in [-1,\infty), \Theta \ne 0. The copula, as \Theta \rightarrow -1^{+} limits, to the countermonotonicity coupla (\mathbf{W}(u,v); W), as \Theta \rightarrow 0 limits to the independence copula (\mathbf{\Pi}(u,v); P), and as \Theta \rightarrow \infty, limits to the comonotonicity copula (\mathbf{M}(u,v); M). The parameter \Theta is readily computed from a Kendall Tau (tauCOP) by \tau_\mathbf{C} = \Theta/(\Theta+2).

Usage

CLcop(u, v, para=NULL, tau=NULL, ...)

Arguments

u |

Nonexceedance probability |

v |

Nonexceedance probability |

para |

A vector (single element) of parameters—the |

tau |

Optional Kendall Tau; and |

... |

Additional arguments to pass. |

Value

Value(s) for the copula are returned. Otherwise if tau is given, then the \Theta is computed and a list having

para |

The parameter |

tau |

Kendall Tau. |

and if para=NULL and tau=NULL, then the values within u and v are used to compute Kendall Tau and then compute the parameter, and these are returned in the aforementioned list.

Author(s)

W.H. Asquith

References

Joe, H., 2014, Dependence modeling with copulas: Boca Raton, CRC Press, 462 p.

See Also

Examples

# Lower tail dependency of Theta = pi --> 2^(-1/pi) = 0.8020089 (Joe, 2014, p. 168)

taildepCOP(cop=CLcop, para=pi)$lambdaL # 0.80201

The Copula

Description

Compute the copula or joint distribution function through a copula as shown by Nelsen (2006, p. 18) is the joint probability

\mathrm{Pr}[U \le u, V \le v] = \mathbf{C}(u,v)\mbox{.}

The copula is an expression of the joint probability that both U \le u and V \le v.

A copula is a type of dependence function that permits straightforward characterization of dependence from independence. Joe (2014, p. 8) comments that “copula families are usually given as cdfs [cumulative distribution functions.]” A radially symmetric or permutation symmetric copula is one such that \mathbf{C}(u,v) = \mathbf{C}(v,u) otherwise the copula is asymmetric.

The copula inversions t = \mathbf{C}(u{=}U, v) or t = \mathbf{C}(u, v{=}V) for a given t and U or V are provided by COPinv and COPinv2, respectively. A copula exists in the domain of the unit square (\mathcal{I}^2 = [0, 1]\times [0,1]) and is a grounded function meaning that

\mathbf{C}(u,0) = 0 = \mathbf{C}(0,v) \mbox{\ and\ thus\ } \mathbf{C}(0,0) = 0\mbox{, }

and other properties of a copula are that

\mathbf{C}(u,1) = u \mbox{\ and\ } \mathbf{C}(1,v) = v\mbox{\ and}

\mathbf{C}(1,1) = 1\mbox{.}

Copulas can be combined with each other (convexCOP, convex2COP, composite1COP,

composite2COP, composite3COP, and glueCOP) to form more complex and sophisticated dependence structures. Also copula multiplication—a special product of two copulas—yields another copula (see prod2COP).

Perhaps the one of the more useful features of this function is that in practical applications it can be used to take a copula formula and reflect or rotated it in fashions to attain association structures that the native definition of the copula can not acquire. The terminal demonstration in the Examples demonstrates this for the Raftery copula (RFcop).

Usage

COP(u, v, cop=NULL, para=NULL,

reflect=c("cop", "surv", "acute", "grave",

"1", "2", "3", "4"), ...)

Arguments

u |

Nonexceedance probability |

v |

Nonexceedance probability |

cop |

A copula function with vectorization as in |

para |

Vector of parameters or other data structures, if needed, to pass to the copula; |

reflect |

The reflection of the copula form (see Note) and the default |

... |

Additional arguments to pass to the copula. |

Value

Value(s) for the copula are returned.

Note

REFLECTIONS OF VARIABLES (ROTATIONS OF THE COPULA)—The copula of (1-U, 1-V) is the survival copula (\hat{\mathbf{C}}(u,v); surCOP) and is defined as

\mathrm{Pr}[\,U > u, V > v\,] = \hat{\mathbf{C}}(u,v) = u + v - 1 + \mathbf{C}(1-u,1-v)\:\rightarrow\mbox{\ \code{"surv"},}

whereas, following the notation of Joe (2014, p. 271), the copula of (1-U, V) is defined as

\mathrm{Pr}[\,U > u, V \le v\,] = \acute{\mathbf{C}}(u,v) = v - \mathbf{C}(1-u,v)\:\rightarrow\mbox{\ \code{"acute"}, and}

the copula of (U, 1-V) is defined as

\mathrm{Pr}[\,U \le u, V > v\,] = \grave{\mathbf{C}}(u,v) = u - \mathbf{C}(u,1-v)\:\rightarrow\mbox{\ \code{"grave"}.}

Here it is useful to stress the probability aspects that change with the reflections, but this section ends with the reflections themselves being graphically highlighted. The Examples stress simple variations on the probability aspects.

To clarify the seemingly clunky nomenclature—Joe (2014) does not provide “names” for \acute{\mathbf{C}}(u,v) or \grave{\mathbf{C}}(u,v)—the following guidance is informative where the numbers in the list align to those for the reflect argument:

\mbox{}\quad\mbox{}(2) "surv" or \hat{\mathbf{C}}(u,v) is a reflection of U and V on the horizontal and vertical axes, respectively,

\mbox{}\quad\mbox{}(3) "acute" or \acute{\mathbf{C}}(u,v) is a reflection of U on the horizontal axis, and

\mbox{}\quad\mbox{}(4) "grave" or \grave{\mathbf{C}}(u,v) is a reflection of V on the verical axis.

The names "acute" and "grave" match those used in the Rd-format math typesetting instructions. Users are directed to the documentation of simCOPmicro for further discussion because the COP function is expected to be an early entry point for new users interested in the copBasic API.

For the copBasic package and in order to keep some logic brief and code accessible for teaching and applied circumstances, reflections of copulas using analogs to the reflect argument are only natively supported in the COP and simCOPmicro functions. The interfaces of copBasic should already be flexible enough for users to adapt and (or) specially name reflections of copulas for deployment. A caveat is that some individual copula implementations might have some self-supporting infrastructure. The reflection can also be set within the para argument when it is a list (see Examples).

An example is warranted. Although the Gumbel–Hougaard copula (GHcop) can be reflected by COP and simCOPmicro and testing is made in the Note section of simCOPmicro, it is suggested that a user generally requiring say a horizontal reflection ru (or vertical reflection rv) of the Gumbel–Hougaard copula write a function named perhaps ruGHcop (or rvGHcop).

Such functions, consistent with the mathematics at the beginning of this Note, can be used throughout functions of copBasic using the cop arguments. The author (Asquith) eschews implementing what is perceived as too much flexibility and overhead for the package to support the three reflection permutations universally across all copula functions of the package. This being said, COP can take an R list for the para argument for rotation/reflection:

set.seed(14)

UV3 <- simCOP(20, cop=COP, pch=16, col=3,

para=list(cop=GLcop, para=pi+1, reflect="3"))

set.seed(14)

UV2 <- simCOP(20, cop=COP, pch=16, col=4, ploton=FALSE,

para=list(cop=GLcop, para=pi+1, reflect="2"))

arrows(x0=UV3[,1], y0=UV3[,2], x=UV2[,1], y=UV2[,2])

and this type of interface is similar to composite1COP as the following rotation and then asymmetric construction shows:

UV <- simCOP(1000, cop=composite1COP,

para=list(cop1=COP,

para1=c(cop=GHcop, para=pi+1, reflect="4"),

alpha=0.1, beta=0.3))

Author(s)

W.H. Asquith

References

Joe, H., 2014, Dependence modeling with copulas: Boca Raton, CRC Press, 462 p.

Nelsen, R.B., 2006, An introduction to copulas: New York, Springer, 269 p.

See Also

coCOP, duCOP, surCOP, surfuncCOP

Examples

u <- runif(1); v <- runif(1)

COP(cop=W,u,v); COP(cop=P,u,v); COP(cop=M,u,v); COP(cop=PSP,u,v)

FF <- 0.75 # 75th percentile, nonexceedance

GG <- 0.20 # 25th percentile, nonexceedance

bF <- 1 - FF; bG <- 1 - GG # exceedance

# What is the probability that both X and Y are less than

# 75th and 20th percentiles, respectively?

COP(cop=P, FF, GG) # 0.15

# What is the probability that both X and Y are greater than

# 75th and 20th percentiles, respectively?

surCOP(cop=P, bF, bG) # 0.20

# What is the probability that either X or Y are less than

# the 75th and 20th percentiles, respectively?

duCOP(cop=P, FF, GG) # 0.8

# What is the probability that either X or Y are greater than

# the 75th and 20th percentiles, respectively?

coCOP(cop=P, bF, bG) # 0.85

# Repeat for the PSP copula:

# What is the probability that both X and Y are less than

# 75th and 20th percentiles, respectively?

COP(cop=PSP, FF, GG) # 0.1875

# What is the probability that both X and Y are greater than

# 75th and 20th percentiles, respectively?

surCOP(cop=PSP, bF, bG) # 0.2375

# What is the probability that either X or Y are less than

# the 75th and 20th percentiles, respectively?

duCOP(cop=PSP, FF, GG) # 0.7625

# What is the probability that either X or Y are greater than

# the 75th and 20th percentiles, respectively?

coCOP(cop=PSP, bF, bG) # 0.8125

# Both of these summations equal unity

COP(cop=PSP, FF, GG) + coCOP(cop=PSP, bF, bG) # 1

surCOP(cop=PSP, bF, bG) + duCOP(cop=PSP, FF, GG) # 1

FF <- 0.99 # 99th percentile, nonexceedance

GG <- 0.50 # 50th percentile, nonexceedance

bF <- 1 - FF # nonexceedance

bG <- 1 - GG # nonexceedance

# What is the probability that both X and Y are less than

# 99th and 50th percentiles, respectively?

COP(cop=P, FF, GG) # 0.495

# What is the probability that both X and Y are greater than

# 99th and 50th percentiles, respectively?

surCOP(cop=P, bF, bG) # 0.005

# What is the probability that either X or Y are less than

# the 99th and 50th percentiles, respectively?

duCOP(cop=P, FF, GG) # 0.995

# What is the probability that either X or Y are greater than

# the 99th and 50th percentiles, respectively?

coCOP(cop=P, bF, bG) # 0.505

## Not run:

# MAJOR EXAMPLE FOR QUICKLY MODIFYING INHERENT ASSOCIATION STRUCTURES

p <- 0.5 # Reasonable strong positive association for the Raftery copula

"RFcop1" <- function(u,v, para) COP(u,v, cop=RFcop, para=para, reflect="1")

"RFcop2" <- function(u,v, para) COP(u,v, cop=RFcop, para=para, reflect="2")

"RFcop3" <- function(u,v, para) COP(u,v, cop=RFcop, para=para, reflect="3")

"RFcop4" <- function(u,v, para) COP(u,v, cop=RFcop, para=para, reflect="4")

d <- 0.01 # Just to speed up the density plots a bit

densityCOPplot(RFcop1, para=p, contour.col=1, deluv=d) # Raftery in the literature

densityCOPplot(RFcop2, para=p, contour.col=1, deluv=d, ploton=FALSE)

densityCOPplot(RFcop3, para=p, contour.col=1, deluv=d, ploton=FALSE)

densityCOPplot(RFcop4, para=p, contour.col=1, deluv=d, ploton=FALSE)

# Now some text into the converging tail to show the reflection used.

text(-2,-2, "reflect=1", col=2); text(+2,+2, "reflect=2", col=2)

text(+2,-2, "reflect=3", col=2); text(-2,+2, "reflect=4", col=2) #

## End(Not run)

## Not run:

# ALTERNATIVE EXAMPLE FOR QUICKLY MODIFYING INHERENT ASSOCIATION STRUCTURES

# To show how the reflection can be alternatively specified and avoid in this case

# making four Raftery functions, pass by a list para argument. Also, demonstrate

# that cop1 --> cop and para1 --> para are the same in use of the function. This

# provides some nomenclature parallel to the other compositing functions.

densityCOPplot(COP, para=list(reflect=1, cop1=RFcop, para=p ), deluv=d,

contour.col=1, drawlabels=FALSE)

densityCOPplot(COP, para=list(reflect=2, cop= RFcop, para1=p), deluv=d,

contour.col=2, drawlabels=FALSE, ploton=FALSE)

densityCOPplot(COP, para=list(reflect=3, cop1=RFcop, para1=p), deluv=d,

contour.col=3, drawlabels=FALSE, ploton=FALSE)

densityCOPplot(COP, para=list(reflect=4, cop= RFcop, para=p ), deluv=d,

contour.col=4, drawlabels=FALSE, ploton=FALSE)

# Now some text into the converging tail to show the reflection used.

text(-2,-2, "reflect=1", col=2); text(+2,+2, "reflect=2", col=2)

text(+2,-2, "reflect=3", col=2); text(-2,+2, "reflect=4", col=2) #

## End(Not run)

## Not run:

# Similar example to previous, but COP() can handle the reflection within a

# parameter list ,and the reflect, being numeric here, is converted to

# character internally.

T12 <- CLcop(tau=0.67)$para # Kendall Tau of 0.67

T12 <- list(cop=CLcop, para=T12, reflect=2) # reflected to upper tail dependency

UV <- simCOP(n=1000, cop=COP, para=T12) #

## End(Not run)

The Inverse of a Copula for V with respect to U

Description

Compute the inverse of a copula for V with respect to U given t or

t = \mathbf{C}(u{=}U,v) \rightarrow \mathbf{C}^{(-1)}(u{=}U, t) = v\mbox{,}

and solving for v. Nelsen (2006, p. 12) does not so name this function as an “inverse.” The COPinv function is internally used by level.curvesCOP and level.curvesCOP2. A common misapplication that will puzzle the user (including the developer after long breaks from package use) is that the following call and error message are often seen, if silent=FALSE:

COPinv(0.2, 0.25, cop=PSP) # Error in uniroot(func, interval = c(lo, 1), u = u, LHS = t, cop = cop, : # f() values at end points not of opposite sign # [1] NA

This is a harmless error in the sense that COPinv is functioning properly. One can not invert a copula for u < t and for u = t the v = 1 because of fundamental copula properties.

Usage

COPinv(cop=NULL, u, t, para=NULL, silent=TRUE, ...)

Arguments

cop |

A copula function; |

u |

Nonexceedance probability |

t |

Nonexceedance probability level |

para |

Vector of parameters or other data structures, if needed, to pass to the copula; |

silent |

The argument of the same name given over to |

... |

Additional arguments to pass. |

Value

Value(s) for v are returned.

Author(s)

W.H. Asquith

References

Nelsen, R.B., 2006, An introduction to copulas: New York, Springer, 269 p.

See Also

COP,

COPinv2,

level.curvesCOP,

level.curvesCOP2

Examples

COPinv(cop=N4212cop, para=2, u=0.5, t=0.2)

The Inverse of a Copula for U with respect to V

Description

Compute the inverse of a copula for U with respect to V given t or

t = \mathbf{C}(u,v{=}V) \rightarrow \mathbf{C}^{(-1)}(v{=}V, t) = u\mbox{,}

and solving for u. Nelsen (2006, p. 12) does not so name this function as an “inverse.” The COPinv2 function is internally used by level.curvesCOP2. A common misapplication that will puzzle the user (including the developer after long breaks from package use) is that the following call and error message are often seen, if silent=FALSE:

COPinv2(0.2, 0.25, cop=PSP) # Error in uniroot(func, interval = c(lo, 1), u = u, LHS = t, cop = cop, : # f() values at end points not of opposite sign # [1] NA

This is a harmless error in the sense that COPinv2 is functioning properly. One can not invert a copula for v < t and for v = t the u = 1 because of fundamental copula properties.

Usage

COPinv2(cop=NULL, v, t, para=NULL, silent=TRUE, ...)

Arguments

cop |

A copula function; |

v |

Nonexceedance probability |

t |

Nonexceedance probability in |

para |

Vector of parameters or other data structures, if needed, to pass to the copula; |

silent |

The argument of the same name given over to |

... |

Additional arguments to pass to the copula. |

Value

Value(s) for u are returned.

Author(s)

W.H. Asquith

References

Nelsen, R.B., 2006, An introduction to copulas: New York, Springer, 269 p.

See Also

COP, COPinv, level.curvesCOP, level.curvesCOP2

Examples

# See those for COPinv as they are the same by analogy.

The Bivariate Empirical Copula

Description

The bivariate empirical copula (Nelsen, 2006, p. 219) for a bivariate sample of length n is defined for random variables X and Y as

\mathbf{C}_n\biggl(\frac{i}{n}, \frac{j}{n}\biggr) = \frac{\mathrm{number\ of\ pairs\ (}x,y\mathrm{)\ with\ }x \le x_{(i)}\mathrm{\ and\ }y \le y_{(j)}}{n}\mbox{,}

where x_{(i)} and y_{(i)}, 1 \le i,j \le n or expressed as

\mathbf{C}_n\biggl(\frac{i}{n}, \frac{j}{n}\biggr) =

\frac{1}{n}\sum_{i=1}^n \mathbf{1}\biggl(\frac{R_i}{n} \le u_i, \frac{S_i}{n} \le v_i \biggr)\mbox{,}

where R_i and S_i are ranks of the data for U and V, and \mathbf{1}(.) is an indicator function that score 1 if condition is true otherwise scoring zero. Using more generic notation, the empirical copula can be defined by

\mathbf{C}_{n}(u,v) =

\frac{1}{n}\sum_{i=1}^n \mathbf{1}\bigl(u^\mathrm{obs}_{i} \le u_i, v^\mathrm{obs}_{i} \le v_i \bigr)\mbox{,}

where u^\mathrm{obs} and v^\mathrm{obs} are thus some type of nonparametric nonexceedance probabilities based on counts of the underlying data expressed in probabilities.

Hazen Empirical Copula—The “Hazen form” of the empirical copula is

\mathbf{C}^\mathcal{H}_{n}(u,v) =

\frac{1}{n}\sum_{i=1}^n \mathbf{1}\biggl(\frac{R_i - 0.5}{n} \le u_i, \frac{S_i - 0.5}{n} \le v_i \biggr)\mbox{,}

which can be triggered by ctype="hazen". This form is named for this package because of direct similarity of the Hazen plotting position to the above definition. Joe (2014, pp. 247–248) uses the Hazen form. Joe continues by saying “[the] adjustment of the uniform score [(R - 0.5)/n]] could be done in an alternative form, but there is [asymptotic] equivalence[, and that] \mathbf{C}^\mathcal{H}_{n} puts mass of n^{-1} at the tuples ([r_{i1} - 0.5]/n, \ldots, [r_{id} - 0.5]/n) for i = 1, \ldots, n.” A footnote by Joe (2014) says that “the conversion [R/(n+1)] is commonly used for the empirical copula.” This later form is the “Weibull form” described next. Joe's preference for the Hazen form is so that the sum of squared normal scores is closer to unity for large n than such a sum would be attained using the Weibull form.

Weibull Empirical Copula—The “Weibull form” of the empirical copula is

\mathbf{C}^\mathcal{W}_{n}(u,v) =

\frac{1}{n}\sum_{i=1}^n \mathbf{1}\biggl(\frac{R_i}{n+1} \le u_i, \frac{S_i}{n+1} \le v_i \biggr)\mbox{,}

which can be triggered by ctype="weibull". This form is named for this package because of direct similarity of the Weibull plotting position to the definition, and this form is the default (see argument description).

Bernstein Empirical Copula—The empirical copula can be extended nonparametrically as the Bernstein empirical copula (Hernández-Maldonado, Díaz-Viera, and Erdely, 2012) and is formulated as

\mathbf{C}^\mathcal{B}_n(u,v; \eta) = \sum_{i=1}^n\sum_{j=1}^n \mathbf{C}_{n}\biggl(\frac{i}{n},\frac{j}{n}\biggr) \times \eta(i,j; u,v)\mbox{,}

where the individual Bernstein weights \eta(i,j) for the kth paired value of the u and v vectors are

\eta(i,j; u,v) = {n \choose i} u^i (1-u)^{n-i} \times {n \choose j} u^j (1-u)^{n-j}\mbox{.}

The Bernstein extension, albeit conceptually pure in its shuffling by binomial coefficients and left- and right-tail weightings, is quite CPU intensive as inspection of the equations above indicates a nest of four for() loops in R. (The native R code of copBasic uses the sapply() function in R liberally for substantial but not a blazing fast speed increase.) The Bernstein extension results in a smoother surface of the empirical copula and can be triggered by ctype="bernstein". Remark, Hernández-Maldonado and others (2024) provide a fast algorithm for the Bernstein smoothing—this is not yet implemented in copBasic.

Checkerboard Empirical Copula—A simple smoothing to the empirical copula is the checkerboard empirical copula (Segers et al., 2017) that has been adapted from the copula package. It is numerically intensive like the Bernstein and possibly of limited usefulness for large sample sizes. The checkerboard extension can be triggered by ctype="checkerboard" and is formulated as

\mathbf{C}^\sharp_{n}(U) = \frac{1}{n+o} \sum_{i=1}^n\prod_{i=1}^d \mathrm{min}[\mathrm{max}[n U_j - R^{(n)}_{i,j} + 1,0],1]\mathrm{,}

where U is a d=2 column matrix of u and v, R is a rank function, and o is an offset term on [0,1].

The empirical copula frequency can be defined (Nelson, 2006, p. 219) as

\mathbf{c}_n(u, v) = \mathbf{C}_n\biggl(\frac{i}{n}, \frac{j}{n}\biggr) -

\mathbf{C}_n\biggl(\frac{i-1}{n}, \frac{j}{n}\biggr) -

\mathbf{C}_n\biggl(\frac{i}{n}, \frac{j-1}{n}\biggr) +

\mathbf{C}_n\biggl(\frac{i-i}{n}, \frac{j-1}{n}\biggr)\mbox{.}

Usage

EMPIRcop(u, v, para=NULL,

ctype=c("weibull", "hazen", "1/n", "bernstein", "checkerboard"),

bernprogress=FALSE, checkerboard.offset=0, ...)

Arguments

u |

Nonexceedance probability |

v |

Nonexceedance probability |

para |

A vector (single element) of parameters—the U-statistics of the data (see Examples). Alternatively, |

ctype |

An alternative means for trigging the definition of |

bernprogress |

The Bernstein copula extension is CPU intensive(!), so a splash counter is pushed to the console via the |

checkerboard.offset |

A scaling of the ratio |

... |

Additional arguments to pass. |

Value

Value(s) for the copula are returned.

Note

Not all theoretical measures of copula dependence (both measures of association and measures of asymmetry), which use numerical integration by the integrate() function in R, can be used for all empirical copulas because of “divergent” integral errors; however, examples using Hoeffding Phi (\Phi_\mathbf{C}; hoefCOP) and shown under Examples. Other measures of copula dependence include blomCOP, footCOP, giniCOP, rhoCOP, tauCOP, wolfCOP, joeskewCOP, and uvlmoms. Each of these measures fortunately has a built-in sample estimator.

It is important to distinquish between a sample estimator and the estimation of the measure using the empirical copula itself via the EMPIRcop function. The sample estimators (triggered by the as.sample arguments for the measures) are reasonably fast and numerically preferred over using the empirical copula. Further, the generally slow numerical integrations for the theoretical definitions of these copula measures might have difficulties. Limited testing, however, suggests prevalence of numerical integration not erroring using the Bernstein extension of the empirical copula, which must be a by-product of the enhanced and sufficient smoothness for the R default numerical integration to succeed. Many of the measures have brute option for a brute-force numerical integration on a regular grid across the empirical copula—these are slow but should not trigger errors. As a general rule, users should still use the sample estimators instead.

Author(s)

W.H. Asquith

References

Hernández-Maldonado, V.M., Díaz-Viera, M., and Erdely, A., 2012, A joint stochastic simulation method using the Bernstein copula as a flexible tool for modeling nonlinear dependence structures between petrophysical properties: Journal of Petroleum Science and Engineering, v. 90–91, pp. 112–123, doi:10.1016/j.petrol.2012.04.018.

Hernández-Maldonado, V.M., Erdely, A., Díaz-Viera, M., and Rios, L., 2024, Fast procedure to compute empirical and Bernstein copulas: Applied Mathematics and Computation, v. 477, article 128827, 14 p., doi:10.1016/j.amc.2024.128827.

Nelsen, R.B., 2006, An introduction to copulas: New York, Springer, 269 p.

Salvadori, G., De Michele, C., Kottegoda, N.T., and Rosso, R., 2007, Extremes in Nature—An approach using copulas: Springer, 289 p.

Segers, J., Sibuya, M., and Tsukahara, H., 2017, The empirical beta copula: Journal of Multivariate Analysis, v. 155, pp. 35–51, doi:10.1016/j.jmva.2016.11.010.

See Also

diagCOP, level.curvesCOP, simCOP

Examples

## Not run:

set.seed(62)

EMPIRcop(0.321,0.78, para=simCOP(n=90, cop=N4212cop,

para=2.32, graphics=FALSE)) # [1] 0.3222222

N4212cop(0.321,0.78, para=2.32) # [1] 0.3201281

## End(Not run)

## Not run:

set.seed(62) # See note below about another seed to try.

psp <- simCOP(n=34, cop=PSP, ploton=FALSE, points=FALSE) * 150

# Pretend psp is real data, the * 150 is to clearly get into an arbitrary unit system.

# The sort=FALSE is critical in the following two calls. Although the Weibull

# plotting positions are chosen, internally EMPIRcop uses ranks, but the model

# here is to imagine one having a sample in native units of the random variables

# and then casting them into probabilities for other purposes.

fakeU <- lmomco::pp(psp[,1], sort=FALSE) # Weibull plotting position i/(n+1)

fakeV <- lmomco::pp(psp[,2], sort=FALSE) # Weibull plotting position i/(n+1)

uv <- data.frame(U=fakeU, V=fakeV); # our U-statistics

# The next four values should be very close if n above were say 1000, but the

# ctype="bernstein"" should not be used if n >> 34 because of inherently long runtime.

PSP(0.4,0.6) # 0.3157895 (compare to listed values below)

# Two seeds are shown so that the user can see that depending on the distribution

# of the values given by para that different coincidences of which method is

# equivalent to another exist.

# For set.seed(62) --- "hazen" == "weibull" by coincidence

# "hazen" --> 0.3529412

# "weibull" --> 0.3529412

# "1/n" --> 0.3235294

# "bernstein" --> 0.3228916

# For set.seed(85) --- "1/n" == "hazen" by coincidence

# "hazen" --> 0.3529412

# "weibull" --> 0.3823529

# "1/n" --> 0.3529412

# "bernstein" --> 0.3440387

# For set.seed(62) --- not all measures of association can be used for all

# empirical copulas because of 'divergent' integral errors, but this is an example

# for Hoeffding Phi. These computations are CPU intensive, esp. Bernstein.

hoefCOP(as.sample=TRUE, para=uv) # (sample estimator is fast) # 0.4987755

hoefCOP(cop=EMPIRcop, para=uv, ctype="hazen") # 0.5035348

hoefCOP(cop=EMPIRcop, para=uv, ctype="weibull") # 0.4977145

hoefCOP(cop=EMPIRcop, para=uv, ctype="1/n") # 0.4003646

hoefCOP(cop=EMPIRcop, para=uv, ctype="bernstein") # 0.4563724

hoefCOP(cop=EMPIRcop, para=uv, ctype="checkerboard") # 0.4952427

## End(Not run)

# All other example suites shown below are dependent on the pseudo-data in the

# variable uv. It is suggested to not run with a sample size much larger than the

# above n=34 if the Bernstein comparison is intended (wanted) simply because of

# lengthy(!) run times, but the n=34 does provide a solid demonstration how the

# level curves for berstein weights are quite smooth.

## Not run:

# Now let us construct as many as three sets of level curves to the sample

# resided in the uv sample from above using the PSP copula.

level.curvesCOP(cop=PSP); # TRUE, parametric, fast, BLACK CURVES

# Empirical copulas can consume lots of CPU.

# RED CURVES, if n is too small, uniroot() errors might be triggered and likely

# will be using the sample size of 34 shown above.

level.curvesCOP(cop=EMPIRcop, para=uv, delu=0.03, col=2, ploton=FALSE)

# GREEN CURVES (large CPU committment)

# Bernstein progress is uninformative because level.curvesCOP() has taken over control.

bpara <- list(para=uv, ctype="bernstein", bernprogress=FALSE)

level.curvesCOP(cop=EMPIRcop, para=bpara, delu=0.03, col=3, ploton=FALSE)

# The delu is increased for faster execution but more important,

# notice the greater smoothness of the Bernstein refinement.

## End(Not run)

## Not run:

# Experimental from R Graphics by Murrell (2005, p.112)

"trans3d" <- # blackslashes seem needed for the package

function(x,y,z, pmat) { # for user manual building but bad syntax

tmat <- cbind(x,y,z,1) %*% pmat # because remember the percent sign is a

return(tmat[,1:2] / tmat[,4]) # a comment character in LaTeX.

}

the.grid <- EMPIRgrid(para=uv, ctype="checkerboard")

the.diag <- diagCOP(cop=EMPIRcop, para=uv, ploton=FALSE, lines=FALSE)

the.persp <- persp(the.grid$empcop, theta=-25, phi=20,

xlab="U VARIABLE", ylab="V VARIABLE", zlab="COPULA C(u,v)")

the.trace <- trans3d(the.diag$t, the.diag$t, the.diag$diagcop, the.persp)

lines(the.trace, lwd=2, col=2) # The diagonal of the copula

# The following could have been used as an alternative to call persp()

the.persp <- persp(x=the.grid$u, y=the.grid$v, z=the.grid$empcop, theta=-25, phi=20,

xlab="U VARIABLE", ylab="V VARIABLE", zlab="COPULA C(u,v)")

lines(the.trace, lwd=2, col=2) # The diagonal of the copula #

## End(Not run)

Data Frame Representation of the Bivariate Empirical Copula

Description

Generate an R data.frame representation of the bivariate empirical copula (Salvadori et al., 2007, p. 140) using the coordinates as preserved in the raw data in the parameter object of the bivariate empirical copula.

Usage

EMPIRcopdf(para=NULL, ...)

Arguments

para |

A vector (single element) of parameters—the U-statistics of the data (see example) to pass to |

... |

Additional arguments to pass to |

Value

An R data.frame of u, v, and \mathbf{C}_{n}(u, v) values of the bivariate empirical copula is returned.

Author(s)

W.H. Asquith

References

Salvadori, G., De Michele, C., Kottegoda, N.T., and Rosso, R., 2007, Extremes in Nature—An approach using copulas: Springer, 289 p.

See Also

Examples

## Not run:

psp <- simCOP(n=39, cop=PSP, ploton=FALSE, points=FALSE) * 150

# Pretend psp is real data, the * 150 is to clearly get into an arbitrary unit system.

# The sort=FALSE is critical in the following two calls to pp() from lmomco.

fakeU <- lmomco::pp(psp[,1], sort=FALSE) # Weibull plotting position i/(n+1)

fakeV <- lmomco::pp(psp[,2], sort=FALSE) # Weibull plotting position i/(n+1)

uv <- data.frame(U=fakeU, V=fakeV) # our U-statistics

empcop <- EMPIRcopdf(para=uv)

plot(empcop$u, empcop$v, cex=1.75*empcop$empcop, pch=16,

xlab="U, NONEXCEEDANCE PROBABILITY", ylab="V, NONEXCEEDANCE PROBABILITY")

# Dot size increases with joint probability (height of the copulatic surface).

points(empcop$u, empcop$v, col=2) # red circles

## End(Not run)

Grid of the Bivariate Empirical Copula

Description

Generate a gridded representation of the bivariate empirical copula (see EMPIRcop, Salvadori et al., 2007, p. 140). This function has the primary intention of supporting 3-D renderings or 2-D images of the copulatic surface, but many empirical copula functions in copBasic rely on the grid of the empirical copula—unlike the functions that support parametric copulas.

Usage

EMPIRgrid(para=NULL, deluv=0.05, verbose=FALSE, ...)

Arguments

para |

A vector (single element) of parameters—the U-statistics of the data (see example); |

deluv |

A delta value of the both the |

verbose |

A logical controlling whether the progress during grid building is to be shown; and |

... |

Additional arguments to pass to |

Value

An R list of the gridded values of u, v, and \mathbf{C}_{n}(u,v) values of the bivariate empirical copula is returned. (Well only \mathbf{C}_{n}(u,v) is in the form of a grid as an R matrix.) The deluv used to generated the grid also is returned.

Note

The extensive suite of examples is included here because the various ways that algorithms involving empirical copulas can be tested. The figures also provide excellent tools for education on copulas.

Author(s)

W.H. Asquith

References

Salvadori, G., De Michele, C., Kottegoda, N.T., and Rosso, R., 2007, Extremes in Nature—An approach using copulas: Springer, 289 p.

See Also

Examples

## Not run:

# EXAMPLE 1:

psp <- simCOP(n=490, cop=PSP, ploton=FALSE, points=FALSE) * 150

# Pretend psp is real data, the * 150 is to clearly get into an arbitrary unit system.

# The sort=FALSE is critical in the following two calls to pp() from lmomco.

fakeU <- lmomco::pp(psp[,1], sort=FALSE) # Weibull plotting position i/(n+1)

fakeV <- lmomco::pp(psp[,2], sort=FALSE) # Weibull plotting position i/(n+1)

uv <- data.frame(U=fakeU, V=fakeV) # our U-statistics

# The follow function is used to make 3-D --> 2-D transformation